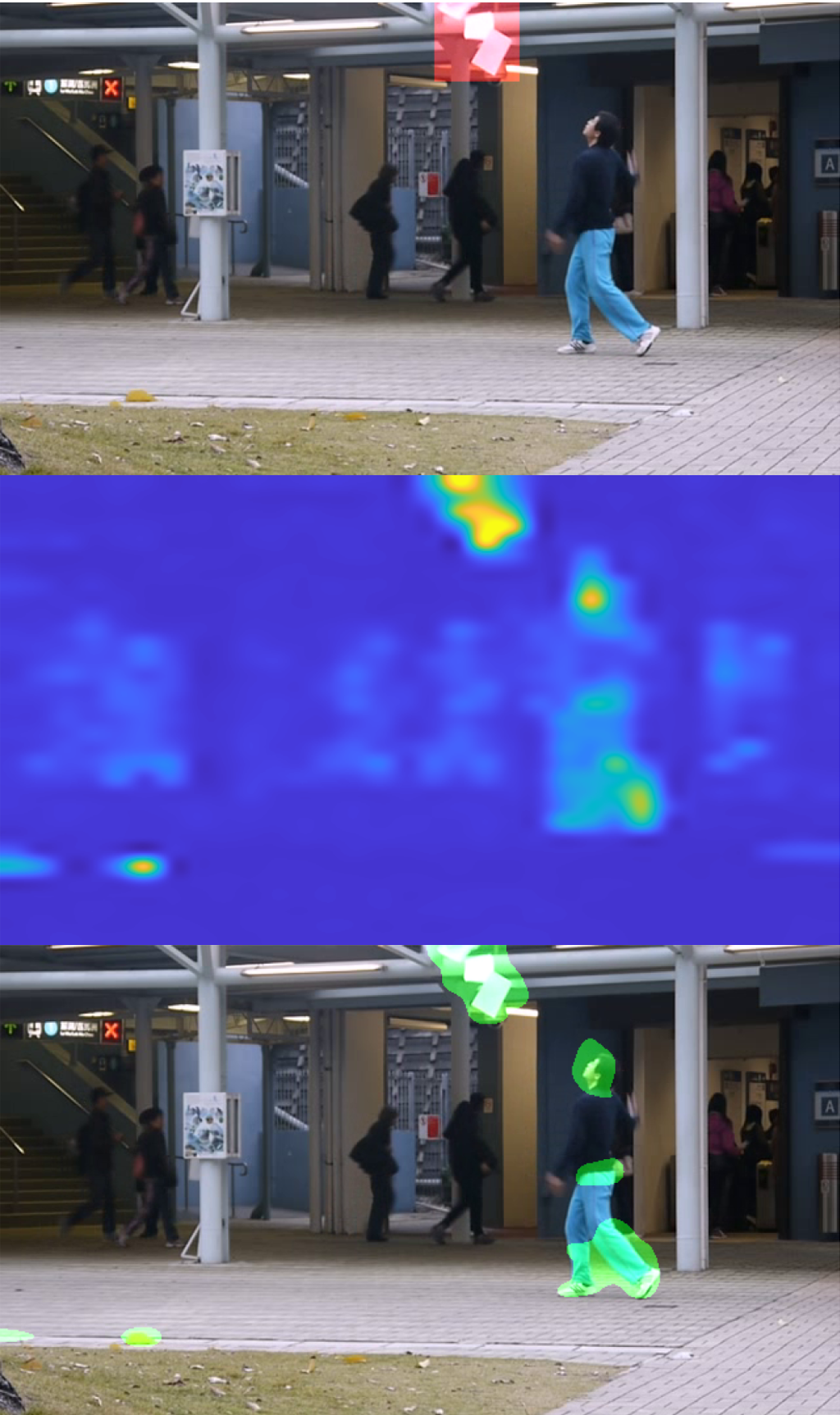

Video anomaly detection (VAD) methods analyze untrimmed videos and make frame-level temporal decisions to identify abnormal events. An important challenge for VAD approaches is accurately segmenting the anomalous regions within each frame, which is what makes the detected anomalies interpretable. This work introduces FUVAS, a fast, few-shot, unsupervised VAD method well suited to low-data scenarios. FUVAS efficiently identifies temporal anomalies and spatially segments them within each frame of the input video. Our approach uses rich video features extracted from pre-trained 3D deep neural networks (DNNs) and performs out-of-distribution detection, using low-rank factorization techniques, in the spatiotemporal deep feature space induced by short temporal segments of the input video. The approach is agnostic to the choice of 3D DNN backbone and supports both convolutional and transformer models.
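To illustrate the general idea of low-rank out-of-distribution scoring in a deep feature space, here is a minimal sketch. It is not the FUVAS implementation; the paper describes the actual factorization and scoring. The sketch assumes that backbone features have already been extracted as an `(H, W, C)` map per short temporal segment. It swaps in a plain truncated SVD (PCA) subspace fitted to features from normal clips and uses the projection residual as the anomaly score. The function names (`fit_low_rank_model`, `anomaly_map`, `frame_score`) and the max-pooling used for the frame-level decision are hypothetical choices made for this example.

```python
import numpy as np


def fit_low_rank_model(train_feats: np.ndarray, rank: int):
    """Fit a rank-`rank` linear subspace to features from normal clips.

    train_feats: (N, C) array; each row is the C-dim backbone feature at one
    spatiotemporal location of a short segment from an anomaly-free video
    (assumed layout, for illustration only).
    Returns the feature mean (C,) and an orthonormal basis (C, rank).
    """
    mean = train_feats.mean(axis=0)
    # Thin SVD of the centered data; rows of vt are principal directions.
    _, _, vt = np.linalg.svd(train_feats - mean, full_matrices=False)
    return mean, vt[:rank].T


def anomaly_map(clip_feats: np.ndarray, mean: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Per-location anomaly scores for one short temporal segment.

    clip_feats: (H, W, C) feature map of the segment.
    Returns an (H, W) map of residual norms after projecting each feature
    vector onto the normal subspace; large values suggest out-of-distribution.
    """
    h, w, c = clip_feats.shape
    x = clip_feats.reshape(-1, c) - mean
    # Remove the component explained by the low-rank "normal" subspace.
    residual = x - (x @ basis) @ basis.T
    return np.linalg.norm(residual, axis=1).reshape(h, w)


def frame_score(score_map: np.ndarray) -> float:
    """Hypothetical temporal decision: the segment's most anomalous location."""
    return float(score_map.max())


if __name__ == "__main__":
    # Synthetic demo: "normal" features lie near a 4-dim subspace of R^64.
    rng = np.random.default_rng(0)
    C, r = 64, 4
    q, _ = np.linalg.qr(rng.standard_normal((C, r)))
    train = rng.standard_normal((2000, r)) @ q.T + 0.01 * rng.standard_normal((2000, C))
    mean, basis = fit_low_rank_model(train, rank=r)

    test = (rng.standard_normal((64, r)) @ q.T).reshape(8, 8, C)
    test[3, 5] += rng.standard_normal(C)  # inject an off-subspace "anomaly"
    smap = anomaly_map(test, mean, basis)
    row, col = np.unravel_index(smap.argmax(), smap.shape)
    print("frame score:", frame_score(smap), "peak at:", (int(row), int(col)))
```

In this sketch, thresholding the `(H, W)` map would give a coarse spatial mask. That mask would then need to be upsampled to frame resolution. The threshold and the upsampling step are deliberately left out because they depend on the backbone and dataset.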

We present comprehensive results and ablation studies across popular datasets, demonstrating the quality, computational efficiency, and wide applicability of our method. Our code is publicly available.

For more details, please see the paper [ICASSP 2025].